
Generative AI and cyber attacks: Time to amplify your security

Wherever you are located and whatever industry you are in, you have almost certainly encountered discussions about ChatGPT and other generative AI tools, with proponents, opponents, fence-sitters and the downright bewildered all having their say.

Whichever way you look at it, generative AI is one of the great new disruptors of our age, capable of producing various types of content (copy, audio, video and images) from existing content in a matter of seconds. The latest advances in this technology mean it can assist in a wide variety of sectors and scenarios, saving time and money.

Yet it also causes concern, because it can just as easily be put to harmful, illicit and nefarious uses. Take cyber threats, for instance. These are likely to rise sharply thanks to generative AI, which makes it child’s play to create and automate email phishing. In this kind of attack, hackers create spoof emails that appear genuine in order to trick the recipient into revealing valuable information such as bank account details, passwords and so on.

Attackers are using generative AI to build new phishing emails that are clear, engaging and convincing. It is now simpler and faster for them to generate email texts that are compelling and credible, as well as grammatically correct.


Attackers can even use just a few pieces of publicly available information to roll out individually targeted messages, known as spear phishing, which makes it even harder for recipients to recognise them as scams. Given something as simple as a potential victim’s email address, an AI system can quickly and easily search the web for information about that person, such as their qualifications.

Threat actors can use generative AI to create endless variations of spear phishing messages in a very short time and to target many different individuals. Such systems can also be set up to optimise continuously through machine learning: by automatically evaluating the success rates of phishing attacks, they can tweak and adapt subsequent emails accordingly.

This becomes all the more alarming when you realise that 90% of all cyberattacks start with phishing, as our research has shown.

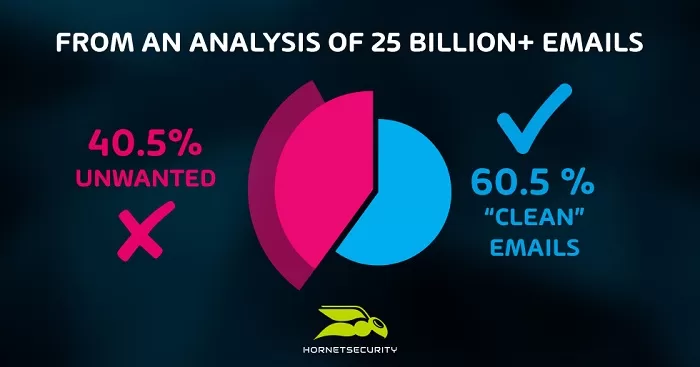

Our Cyber Security Report 2023 has also revealed that more than 40 percent of all email traffic poses a potential threat. This share is likely to grow as attackers use generative AI to develop new cyberattacks quickly. It is therefore essential for businesses to implement the right security solutions to protect their employees from sophisticated AI-based spear phishing attacks, and to take steps to raise awareness.

AI-supported cybercrime

Governments too are feeling the impact of generative AI. AI is at the heart of deepfake technologies, which enable voice phishing (vishing) attacks using manipulated voice and video. A major vishing incident occurred last year in the form of a video call between the then Governing Mayor of Berlin, Franziska Giffey, and someone posing as the mayor of Kyiv, Vitali Klitschko. Experts believe that AI was used to manipulate Klitschko’s face. This would have taken several hours of work with conventional AI models, but newer generative AI tools cut it to just a few minutes.

While some government-focused attacks aim to spread misinformation or propaganda, others are intended to sabotage critical infrastructure (CRITIS) systems. This was the case with the recent Russian attacks on the Ukrainian power grid.

Cyber espionage is another factor, with the goal of stealing military, political or economic information, and even funds such as cryptocurrencies. There are also hack-and-leak attacks, which seek to obtain and publish incriminating material about their targets.

Authorities are responding too. Italy’s data protection authority temporarily banned ChatGPT ‘with immediate effect’, stating that it infringed the EU’s GDPR and raised serious privacy concerns. The UK government plans to introduce guidelines for the “responsible use” of AI.

The importance of security awareness among employees

Businesses would be wise to follow suit and prepare for a new wave of AI-supported cybercrime.

It is critical that companies provide their employees with security awareness training that focuses on three key pillars: mindset – skillset – toolset:

- Mindset involves raising employees’ cybersecurity awareness, strengthening both their self-efficacy and their sense of personal responsibility for being part of the solution as a ‘human firewall’.

- Skillset includes awareness training that combines theoretical forms of learning, such as e-learning or classroom training, with realistic spear phishing simulations. By facing a variety of simulated phishing attacks as part of an ongoing training plan, employees learn to recognise and block threats quickly and almost instinctively. This counters the thoughtless clicks on phishing emails that can leave a company open to malicious attacks.

- Toolset incorporates the processes and tools that strengthen employees’ security behaviour; for example, password managers that stop users from reusing the same login credentials across multiple accounts.

Building a sustainable security culture

Yet our studies show that only one in three organisations provides cybersecurity awareness training to employees, and that, on average, it takes employees three months of training to reach the ‘protection zone’. Given the rapid development of new AI technologies, it is key for businesses to establish a security culture and prioritise good cybersecurity practices.

This is where our fully automated Security Awareness Service comes in. It is designed to address sophisticated spear phishing attacks. As part of the service, e-training is assigned to employees on an ongoing basis, and their security behaviour is measured using our unique ESI® metric. The combined training and phishing simulator adapts automatically to each individual.

Of course, a next-generation email security solution is critical to combat all kinds of cyber threats, and that is what our flagship product, 365 Total Protection, provides. Coupled with security awareness services and training for employees, it helps to foster a well-rounded and sustainable security culture, based on the ‘mindset – skillset – toolset’ triad model, that is equipped to deal with cyber threats from current and emerging AI capabilities.

Whatever one’s individual views on generative AI, these precautionary steps are a must to equip businesses against the rise of these new technologies. If our critical data is to remain safe, our security awareness as businesses must keep pace.

Daniel Hofmann is founder and CEO of Hornetsecurity

He has been an independent entrepreneur and security industry influencer since 2004 and has held several positions in the IT market. In 2007, he founded Hornetsecurity in Hannover, Germany, and developed services for secure email communication, including spam and virus filters. Under his guidance, Hornetsecurity has developed a comprehensive portfolio of managed cloud security services that serves customers worldwide from its regional offices. He is responsible for strategic corporate development.