Apple’s $1 Million Challenge: Can You Hack Its Most Secure AI Server?

- Apple is offering up to £800,000 (US$1 million) to researchers who can compromise its new AI cloud server infrastructure, one of the most ambitious bug bounty programmes to date.

- With Apple Intelligence features rolling out on iOS 18 and beyond, this initiative directly impacts how your data is processed, encrypted, and deleted in real time.

Imagine a tech giant handing over the keys (well, almost) to one of its most tightly guarded systems and daring the world to break in. That’s not just a plot from a cyber-thriller; it’s Apple’s real-world experiment with transparency, security, and AI accountability. In a surprisingly open move, the famously secretive company is publishing code for key parts of the system and offering up to £800,000 to researchers who can compromise its brand-new Private Cloud Compute (PCC) servers, the backbone of the Apple Intelligence features introduced in iOS 18.1.

So why this bold step now? And what does it mean for your data, your phone, and the future of privacy-first technology? Let’s unpack the details.

What Exactly Is Apple’s Private Cloud Compute?

Think of it as a safety valve for Apple’s AI, designed to handle the tasks your iPhone can’t process locally. Most of Apple Intelligence runs on-device, but when a job gets too complex, it’s sent to the cloud. Not just any cloud, though—Apple’s very own.

The PCC system is built on Apple’s own custom silicon and runs a heavily modified version of iOS optimised for security. It deletes user data as soon as a task is complete and offers code transparency, so security researchers can see what’s under the hood. There’s no permanent storage, no profiling, and no third-party access. It’s Apple’s way of making sure your information passes through rather than lingers.
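To make the “passes through rather than lingers” idea concrete, here is a minimal Python sketch. It is a hypothetical illustration, not Apple’s code: `StatelessNode` and `handle` are made-up names, and a simple word count stands in for the real AI task. The point is structural: the node keeps only an anonymous counter between requests, and the request itself lives in a local buffer that is wiped when the call ends.

```python
# Hypothetical, simplified sketch of stateless request handling.
# This is NOT Apple's PCC code; it only models "process, return, keep nothing".
from dataclasses import dataclass


@dataclass
class StatelessNode:
    # The only state kept between requests is an anonymous counter:
    # no request contents, no user IDs, no stored history.
    requests_served: int = 0

    def handle(self, request: bytes) -> str:
        # Hold the request in a local, in-memory buffer only.
        buffer = bytearray(request)
        try:
            # Stand-in for the real AI task: count the words in the request.
            word_count = len(buffer.split())
            self.requests_served += 1
            return f"{word_count} words"
        finally:
            # Best-effort wipe of the local buffer before it goes out of scope.
            # Python cannot guarantee every copy in memory is erased; this
            # only illustrates the intent of the design.
            buffer[:] = bytes(len(buffer))


node = StatelessNode()
print(node.handle(b"summarise my meeting notes"))  # 4 words
print(vars(node))  # {'requests_served': 1}
```

After the call, the only thing the node remembers is that one request was served; the text of the request is not stored anywhere on the object.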

So… What’s This Million-Dollar Dare?

Apple is expanding its Security Bounty programme to test just how impenetrable the PCC is. Researchers are invited to explore a specially created Virtual Research Environment—essentially a sandbox version of PCC—and attempt to find weaknesses.

The rewards? They range from £40,000 for accidental data disclosure caused by a deployment or configuration issue, up to £800,000 for a remote attack that achieves arbitrary code execution on PCC. Apple is also providing tools, documentation, and access to help ethical hackers give it their best shot.

In tech speak, this is as close to an open-book exam as it gets, and Apple is daring researchers to ace it.

Why Should You Care?

The way your iPhone processes personal data—your messages, photos, voice notes, and location—is changing. With AI deeply integrated into everyday interactions, where and how this processing happens matters more than ever.

Apple claims that only minimal data ever leaves your device, and when it does, it’s encrypted in transit, processed briefly, and then deleted. No logging. No ID tags. No stored records.

This privacy-first approach stands in contrast to more cloud-reliant competitors. For users in regions like the UK and EU, where data protection laws such as GDPR are foundational, Apple’s design could set the standard.

The Bigger Picture: Apple vs. The Rest

Where Apple zigged, others zagged. While services built on Meta’s Llama, Google’s Gemini, and OpenAI’s ChatGPT lean heavily on cloud computation, Apple’s AI ambitions centre on your device: local, transient, and tightly controlled.

Even when data must go to the cloud, it’s stripped of identifiers, encrypted, and deleted immediately after use. It’s a privacy model designed for trust, and Apple knows trust is the new currency.

By offering a reward to anyone who can prove it wrong, Apple isn’t just talking the talk; it’s putting money on the line.

Why Cybersecurity Experts Are Watching Closely

Security researchers are often at odds with big tech firms, hunting for vulnerabilities that may or may not be acknowledged. Apple’s decision to proactively open up its system, provide tools, and welcome scrutiny marks a notable shift for the company.

The transparency of this challenge is drawing global attention. For those in cybersecurity, it’s an opportunity to benchmark what Apple bills as one of the most secure commercial AI environments ever designed. And for Apple, it’s a stress test wrapped in a million-dollar bow.

What’s Happening Right Now?

The bounty programme is live. Researchers from Europe, North America, and Asia are already deep-diving into Apple’s virtual system, looking for cracks.

And while your everyday iOS update might not reflect this effort visibly, the behind-the-scenes work could determine the trustworthiness of future AI systems, not just from Apple, but from every tech company watching this unfold.

What’s Next for Apple Intelligence?

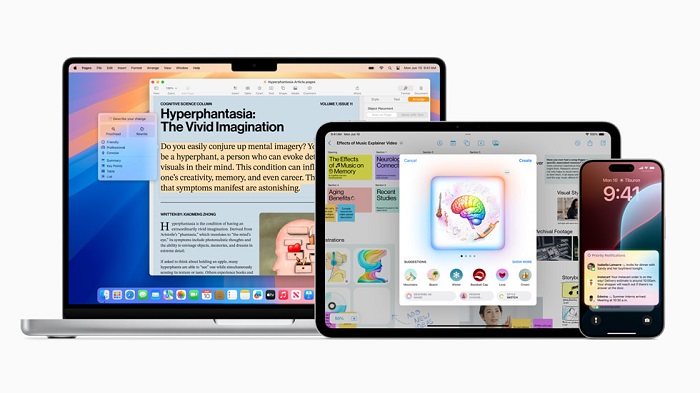

Apple’s suite of AI tools continues to roll out across iPhones, iPads, and Macs through 2025, bringing features like:

- Smart writing assistance and summarisation tools

- Context-aware Siri interactions

- Personalised suggestions and smart replies

Every one of these is backed, when needed, by PCC, which processes your request under an invisibility cloak of encryption and then erases it entirely once the task is complete.

Privacy, AI, and Apple’s High-Stakes Bet on Trust

For tech startups, data scientists, and privacy advocates around the world, Apple’s Private Cloud Compute isn’t just a new product launch; it’s a clear signal. By placing privacy at the centre of its AI infrastructure and inviting the public to test that promise, Apple is confronting a core tension in modern computing: can powerful machine learning coexist with meaningful data protection?

By choosing local-first processing, transparent systems, and temporary cloud use with no residual data, Apple has drawn a new line in the sand—one that will not only influence rivals but may also inspire regulatory frameworks, especially across privacy-conscious regions like the UK and EU. Brands, both large and emerging, that rely on consumer trust will now have a benchmark to look toward.

And as the £800,000 bug bounty continues to draw scrutiny and participation, the industry is watching closely. Whether Apple’s fortress holds or falters, one thing is certain—how we think about trust, data, and AI won’t be the same again.